Technologies involved in distributed computing

Saturday, 8 September 2012


Distributed computing and how each technology differs from one another.


Introduction:

Distributed computing is the next step in the progress of computing: computers are not only networked, but also intelligently distribute their workload across one another so that each machine stays busy and does not waste the electrical energy it consumes. When we combine the concept of distributed computing with the tens of millions of computers connected to the Internet, we get, in aggregate, more computing power than any single computer on Earth.

Definition: Distributed computing is the field of computer science that studies distributed systems. It is also a method of computer processing in which different parts of a program run simultaneously on two or more computers that communicate with each other over a network, working together toward a common goal. Distributed computing is a type of segmented or parallel computing, but the latter term is most commonly used to refer to processing in which different parts of a program run simultaneously on two or more processors that are part of the same computer. While both types of processing require that a program be segmented (divided into sections that can run simultaneously), distributed computing also requires that the division of the program take into account the different environments on which the different sections of the program will run.

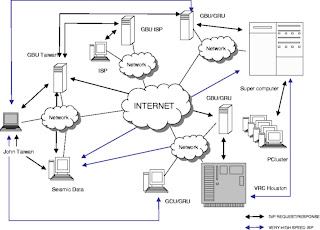

Fig a: Architecture of distributed computing.

Technologies involved in distributed computing:

It all began with RPC:

RPC stands for Remote Procedure Call (Sun's ONC RPC version 2 is specified in RFC 1831), the first distributed computing technology to gain widespread use. The idea behind RPC is to call a procedure in another process and address space, either on the same processor or across the network on another processor, without having to deal with the concrete details of how this is done beyond making a procedure call.

Before an RPC call can be made, both the client and the server must have stubs for the remote function; these are usually generated from an interface definition language (IDL). When a client makes an RPC call, the arguments to the remote function are marshalled and sent across the network, and the client waits until the server sends a response. Some arguments, such as pointers, are difficult to marshal, because a memory address on the client is meaningless to the server. Various strategies for passing pointers are therefore used, the two most popular being:

a.) Disallowing pointer arguments, and

b.) Copying what the pointer points at and sending that to the remote function.
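To make marshalling concrete, here is a minimal Java sketch of a client stub and a server stub for a hypothetical remote procedure `sum(int[] values)`. The "network" is just a byte array, and the wire format (procedure number, element count, elements) is an assumption for illustration, not the real ONC RPC/XDR encoding. Note that the array is copied by value into the message, which is strategy (b) above.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical RPC sketch: the client stub marshals a call into bytes, the server
// stub unmarshals it and runs the real procedure. A byte[] stands in for the network.
class RpcMarshallingSketch {
    static final int PROC_SUM = 1; // hypothetical procedure number

    // The actual procedure, which lives on the "server".
    static int sum(int[] values) {
        int total = 0;
        for (int v : values) total += v;
        return total;
    }

    // Client stub: copies the array contents (not a pointer) into the request message.
    static byte[] marshalSumRequest(int[] values) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            out.writeInt(PROC_SUM);
            out.writeInt(values.length);
            for (int v : values) out.writeInt(v);
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Server stub: rebuilds the arguments, calls the procedure, marshals the result.
    static byte[] dispatch(byte[] request) {
        try {
            DataInputStream in = new DataInputStream(new ByteArrayInputStream(request));
            int proc = in.readInt();
            if (proc != PROC_SUM) throw new IllegalArgumentException("unknown procedure " + proc);
            int[] values = new int[in.readInt()];
            for (int i = 0; i < values.length; i++) values[i] = in.readInt();
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            new DataOutputStream(bytes).writeInt(sum(values));
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // What the caller sees: an ordinary-looking procedure call.
    static int remoteSum(int[] values) {
        byte[] reply = dispatch(marshalSumRequest(values)); // "send" request, wait for reply
        try {
            return new DataInputStream(new ByteArrayInputStream(reply)).readInt();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(remoteSum(new int[] {1, 2, 3, 4})); // prints 10
    }
}
```

In a real system the two stubs would be generated from the IDL rather than written by hand, and `dispatch` would run in a different process.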

An RPC function locates the server in one of two ways:

- Hard coding the address of the remote server, which is extremely inflexible and may require a recompile if that server goes down.

- Using dynamic binding, where servers export whatever interfaces/services they support and clients pick the server they want to use from among those that support the needed service.
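Dynamic binding can be sketched as a small in-memory binder: servers export the services they support, and clients ask the binder for a server offering the service they need instead of hard coding an address. The `BinderSketch` class, the service names, and the addresses are hypothetical; in ONC RPC this role is played by the portmapper/rpcbind service, whose protocol is not modelled here.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical binder for dynamic binding: servers register, clients look up.
class BinderSketch {
    private final Map<String, List<String>> exports = new LinkedHashMap<>();

    // A server exports a service it supports, at its network address.
    void export(String service, String serverAddress) {
        exports.computeIfAbsent(service, k -> new ArrayList<>()).add(serverAddress);
    }

    // A server that goes down withdraws all of its exports.
    void withdraw(String serverAddress) {
        exports.values().forEach(addresses -> addresses.remove(serverAddress));
    }

    // A client asks for any server offering the service (here: the first one registered).
    Optional<String> locate(String service) {
        List<String> addresses = exports.getOrDefault(service, List.of());
        return addresses.isEmpty() ? Optional.empty() : Optional.of(addresses.get(0));
    }

    public static void main(String[] args) {
        BinderSketch binder = new BinderSketch();
        binder.export("payroll", "10.0.0.5:7000");
        binder.export("payroll", "10.0.0.6:7000");
        binder.withdraw("10.0.0.5:7000");              // the first server goes down
        System.out.println(binder.locate("payroll")); // Optional[10.0.0.6:7000]
    }
}
```

Because the client only knows the service name, a server failure means looking up another server rather than recompiling the client.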

RPC programs, like other distributed systems, face a number of problems specific to their situation, such as:

- Network packets containing client requests being lost.

- Network packets containing server responses being lost.

- The client being unable to locate its server.
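A common way to cope with lost requests and lost replies is for the client to retransmit after a timeout while tagging every request with an ID, so the server can recognise a duplicate and resend its cached reply instead of executing the procedure twice (at-most-once semantics). The sketch below simulates this with a hypothetical `LossyChannel` that drops chosen messages; the class names and the drop pattern are assumptions, and a real client would detect loss with a timer rather than a boolean.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical simulation of at-most-once RPC over a lossy network.
class RetrySketch {
    // Server that executes each request ID at most once and caches its reply.
    static class Server {
        final Map<Long, Integer> replyCache = new HashMap<>();
        int executions = 0; // how many times the procedure really ran
        int counter = 0;    // state changed by a non-idempotent operation

        int handle(long requestId) {
            Integer cached = replyCache.get(requestId);
            if (cached != null) return cached; // duplicate request: resend old reply
            executions++;
            int reply = ++counter;
            replyCache.put(requestId, reply);
            return reply;
        }
    }

    // Simulated network that drops the messages whose sequence numbers are listed.
    static class LossyChannel {
        private final Set<Integer> dropped;
        private int sent = 0;
        LossyChannel(Set<Integer> dropped) { this.dropped = dropped; }
        boolean deliver() { return !dropped.contains(sent++); }
    }

    // Client: resend the SAME request ID until a reply arrives or attempts run out.
    static Integer call(Server server, LossyChannel net, long requestId, int maxAttempts) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            if (!net.deliver()) continue;       // request lost: "time out" and retry
            int reply = server.handle(requestId);
            if (!net.deliver()) continue;       // reply lost: "time out" and retry
            return reply;
        }
        return null;                            // give up: report failure to the caller
    }

    public static void main(String[] args) {
        Server server = new Server();
        // Message 0 (first request) and message 2 (first reply) are lost.
        LossyChannel net = new LossyChannel(Set.of(0, 2));
        System.out.println(call(server, net, 42L, 5)); // 1
        System.out.println(server.executions);         // 1: executed only once
    }
}
```

Without the reply cache, the retransmission after the lost reply would have incremented the counter a second time.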

The need for distributed object and component systems

CORBA

The Common Object Request Broker Architecture (CORBA) is a standard defined by the Object Management Group (OMG) that enables software components written in multiple computer languages and running on multiple computers to work together (i.e., it supports multiple platforms). A CORBA application usually consists of an Object Request Broker (ORB), a client and a server. An ORB is responsible for matching a requesting client to the server that will perform the request, using an object reference to locate the target object. When the ORB examines the object reference and discovers that the target object is remote, it marshals the arguments and routes the invocation over the network to the remote object's ORB. The remote ORB then invokes the method locally and sends the results back to the client via the network. Besides sending and receiving remote method invocations, ORBs can implement many optional features, including looking up objects by name, maintaining persistent objects, and supporting transaction processing. A primary feature of CORBA is its interoperability between various platforms and programming languages.

The first step in creating a CORBA application is to define the interface for the remote object using the OMG's Interface Definition Language (IDL). Compiling the IDL file yields two kinds of files: a stub, which implements the client side of the application, and a skeleton, which implements the server side. Stubs and skeletons serve as proxies for clients and servers, respectively. Because IDL defines interfaces so strictly, the stub on the client side has no trouble interacting with the skeleton on the server side, even if the two are compiled into different programming languages, use different ORBs, and run on different operating systems.

Then, to invoke the remote object instance, the client first obtains its object reference via the ORB. To make the remote invocation, the client uses the same code that it would use for a local invocation, but with an object reference to the remote object instead of an instance of a local object. The ORB then routes the invocation over the network to the remote object's ORB rather than to another process on the same computer.
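The local-versus-remote routing decision can be sketched without a real ORB. In this simulation an object reference carries a host name and an object key, and a hypothetical `MiniOrb` calls the servant directly when the reference points at its own host, otherwise forwarding the call to the ORB on the remote host. Everything here is invented for illustration and is not the CORBA API (Java's bundled `org.omg.CORBA` packages were removed in JDK 11); to keep it short, each servant exposes a single string operation and no real marshalling takes place.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical ORB simulation showing location transparency.
class MiniOrbSketch {
    // An object reference: which host's ORB owns the object, and its key there.
    static final class ObjectRef {
        final String host;
        final String objectKey;
        ObjectRef(String host, String objectKey) { this.host = host; this.objectKey = objectKey; }
    }

    // Simulated network: host name -> ORB running on that host.
    static final Map<String, MiniOrb> NETWORK = new HashMap<>();

    static final class MiniOrb {
        final String host;
        final Map<String, Function<String, String>> servants = new HashMap<>();
        int forwarded = 0; // how many calls this ORB sent to another host

        MiniOrb(String host) { this.host = host; NETWORK.put(host, this); }

        // Register a servant and hand out a reference to it.
        ObjectRef activate(String key, Function<String, String> servant) {
            servants.put(key, servant);
            return new ObjectRef(host, key);
        }

        // Client code calls this the same way whether the object is local or remote.
        String invoke(ObjectRef ref, String argument) {
            if (ref.host.equals(host)) {
                return servants.get(ref.objectKey).apply(argument); // local: call directly
            }
            forwarded++;
            // Remote: a real ORB would marshal the request (e.g. as a GIOP message) and
            // send it to the remote ORB, which then invokes the method locally.
            return NETWORK.get(ref.host).invoke(ref, argument);
        }
    }

    public static void main(String[] args) {
        MiniOrb clientOrb = new MiniOrb("client-host");
        MiniOrb serverOrb = new MiniOrb("server-host");
        ObjectRef greeter = serverOrb.activate("Greeter", name -> "Hello, " + name);
        System.out.println(clientOrb.invoke(greeter, "Ada")); // Hello, Ada
        System.out.println(clientOrb.forwarded);               // 1
    }
}
```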

CORBA also supports discovering information about remote objects dynamically at run time. The IDL compiler generates type information for each method in an interface and stores it in the Interface Repository (IR). A client can thus query the IR to get run-time information about a particular interface, and then use that information to create and invoke a method on the remote CORBA server object dynamically through the Dynamic Invocation Interface (DII). Similarly, on the server side, the Dynamic Skeleton Interface (DSI) allows a client's request to be delivered to a CORBA server object implementation that has no compile-time knowledge of the type of object it is implementing.
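Java has no Interface Repository, but its reflection API offers a close analogy to the DII: a caller can list an interface's operations at run time and invoke one by name, passing arguments as plain objects, without being compiled against that interface's methods. The sketch below uses only `java.lang.reflect` and illustrates the idea of dynamic invocation; it is not the CORBA DII API itself, and the `Account` interface is hypothetical.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Reflection as an analogy for CORBA's Interface Repository + DII.
class DynamicInvocationSketch {
    // A hypothetical interface the dynamic caller does not call directly.
    interface Account {
        int balance();
        int deposit(int amount);
    }

    static class SimpleAccount implements Account {
        private int balance;
        public int balance() { return balance; }
        public int deposit(int amount) { balance += amount; return balance; }
    }

    // Like querying an Interface Repository: list the operations of an interface.
    static List<String> operations(Class<?> iface) {
        List<String> names = new ArrayList<>();
        for (Method m : iface.getMethods()) names.add(m.getName());
        names.sort(null); // natural (alphabetical) order
        return names;
    }

    // Like building a DII request: choose the operation by name, pass Object arguments.
    static Object invoke(Object target, Class<?> iface, String operation, Object... args) {
        for (Method m : iface.getMethods()) {
            if (m.getName().equals(operation) && m.getParameterCount() == args.length) {
                try {
                    return m.invoke(target, args);
                } catch (IllegalAccessException | InvocationTargetException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
        throw new IllegalArgumentException("no operation " + operation);
    }

    public static void main(String[] args) {
        Object account = new SimpleAccount();
        System.out.println(operations(Account.class));                     // [balance, deposit]
        System.out.println(invoke(account, Account.class, "deposit", 50)); // 50
    }
}
```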

CORBA is often considered a superficial specification because it concerns itself more with syntax than with semantics. CORBA specifies a large number of services that can be provided, but only to the extent of describing what interfaces application developers should use. Unfortunately, the bare minimum that CORBA requires from service providers makes no mention of security, high availability, failure recovery, or guaranteed behaviour of objects beyond the basic functionality, and CORBA instead deems these features optional. The result of this lowest-common-denominator approach is that ORBs vary so widely from vendor to vendor that it is extremely difficult to write portable CORBA code, because important features such as transactional support and error recovery are inconsistent across ORBs. Fortunately, much of this has changed with the development of the CORBA Component Model, which is a superset of Enterprise JavaBeans.

As distributed computing became more widespread, more flexibility and functionality were required than RPC could provide. RPC proved suitable for two-tier client/server architectures, where the application logic is either in the user application or within the actual database or file server. Unfortunately, this was not enough: more and more people wanted three-tier client/server architectures, where the application is split into the client application (usually a GUI or browser), the application logic, and the data store (usually a database server). Soon people wanted to move to N-tier applications, with several separate layers of application logic between the client application and the database server.

The advantage of N-tier applications is that the application logic can be divided into reusable, modular components instead of one monolithic codebase. Distributed object systems solved many of the problems in RPC that made large-scale system building difficult, in much the same way that object-oriented paradigms swept aside procedural programming and design paradigms. Distributed object systems make it possible to design and implement a distributed system as a group of reusable, modular, and easily deployable components, where complexity can be managed and hidden behind layers of abstraction.

DCOM/COM+

Distributed Component Object Model (DCOM) is the distributed version of Microsoft's COM technology, which allows the creation and use of binary objects/components from languages other than the one they were originally written in; supported languages include Java (J++), C++, Visual Basic, JScript, and VBScript. DCOM works over the network by using proxies and stubs. When the client instantiates a component whose registry entry suggests that it resides outside the process space, DCOM creates a wrapper for the component and hands the client a pointer to the wrapper. This wrapper, called a proxy, simply marshals method calls and routes them across the network. On the other end, DCOM creates another wrapper, called a stub, which unmarshals method calls and routes them to an instance of the component.

A DCOM server object can support multiple interfaces, each representing a different behaviour of the object. A DCOM client calls the exposed methods of a DCOM server by acquiring a pointer to one of the server object's interfaces. The client can then invoke the server object's exposed methods through the acquired interface pointer as if the server object resided in the client's address space.
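The proxy/stub pair and the idea of one object exposing several interfaces can be imitated in Java with `java.lang.reflect.Proxy`. In this sketch the proxy turns each call into a message (method name, parameter types, arguments) and hands it to a stub-like dispatcher, which invokes the real component; a helper named after COM's `IUnknown::QueryInterface` returns a proxy for whichever interface the client asks for. It all runs in one JVM, the interface names are hypothetical, and none of this is the COM/DCOM API.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

// Hypothetical imitation of DCOM-style proxies and stubs using Java dynamic proxies.
class ProxyStubSketch {
    // Two interfaces exposed by the same component.
    interface Spelling { boolean isWord(String text); }
    interface WordCount { int count(String text); }

    // The real component, which would live "in another process".
    static class TextComponent implements Spelling, WordCount {
        public boolean isWord(String text) { return text.matches("[A-Za-z]+"); }
        public int count(String text) {
            String trimmed = text.trim();
            return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
        }
    }

    // Server-side stub: receives a call message and invokes the component.
    static class Stub {
        final Object component;
        int callsHandled = 0;
        Stub(Object component) { this.component = component; }

        Object dispatch(Class<?> iface, String methodName, Class<?>[] types, Object[] args) {
            callsHandled++;
            try {
                Method m = iface.getMethod(methodName, types);
                return m.invoke(component, args);
            } catch (NoSuchMethodException | IllegalAccessException | InvocationTargetException e) {
                throw new IllegalStateException(e);
            }
        }
    }

    // Client-side "QueryInterface": returns a proxy for the requested interface.
    static <T> T queryInterface(Stub stub, Class<T> iface) {
        if (!iface.isInstance(stub.component)) {
            throw new IllegalArgumentException("interface not supported: " + iface.getName());
        }
        Object proxy = Proxy.newProxyInstance(
                iface.getClassLoader(),
                new Class<?>[] {iface},
                // The proxy forwards every call to the stub as (name, types, arguments).
                (p, method, args) -> stub.dispatch(iface, method.getName(),
                        method.getParameterTypes(), args));
        return iface.cast(proxy);
    }

    public static void main(String[] args) {
        Stub stub = new Stub(new TextComponent());
        WordCount counter = queryInterface(stub, WordCount.class);
        Spelling speller = queryInterface(stub, Spelling.class);
        System.out.println(counter.count("the quick brown fox")); // 4
        System.out.println(speller.isWord("DCOM"));               // true
        System.out.println(stub.callsHandled);                    // 2
    }
}
```

The client only ever holds interface-typed proxies, which mirrors how a DCOM client works through interface pointers rather than the object itself.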

Java RMI

Java Remote Method Invocation (RMI) is a technology that allows Java objects to be shared between Java Virtual Machines (JVMs) across a network. An RMI application consists of a server that creates remote objects conforming to a specified interface, which are available for method invocation by client applications that obtain a remote reference to the object. RMI treats a remote object differently from a local object when the object is passed from one virtual machine to another. Rather than making a copy of the implementation object in the receiving virtual machine, RMI passes a remote stub for the remote object. The stub acts as the local representative, or proxy, for the remote object and is, to the caller, the remote reference. The caller invokes a method on the local stub, which is responsible for carrying out the method call on the remote object. A stub for a remote object implements the same set of remote interfaces that the remote object implements. This allows a stub to be cast to any of the interfaces that the remote object implements. However, it also means that only the methods defined in a remote interface can be called in the receiving virtual machine.

RMI can dynamically load classes, via their bytecode, from one JVM to another even if the class is not defined on the receiver's JVM. This means that new object types can be added to an application simply by upgrading the classes on the server, with no other work required on the part of the receiver. This transparent loading of new classes via their bytecode is a distinctive feature of RMI that greatly simplifies modifying and updating a program.

The first step in creating an RMI application is creating a remote interface. A remote interface extends java.rmi.Remote, which indicates that it describes a remote object whose methods can be invoked across virtual machines. Any object that implements this interface becomes a remote object.

To show dynamic class loading at work, an interface describing an object that can be serialized and passed from JVM to JVM should also be created. This interface extends the java.io.Serializable interface. RMI uses the object serialization mechanism to transport objects by value between Java virtual machines. Implementing Serializable marks the class as capable of being converted into a self-describing byte stream that can be used to reconstruct an exact copy of the serialized object when the object is read back from the stream. A value of any type can be passed to or from a remote method as long as it is a primitive data type, a remote object, or an object that implements the interface java.io.Serializable. Remote objects are essentially passed by reference: a remote object reference is a stub, which is a client-side proxy that implements the complete set of remote interfaces that the remote object implements. Local objects are passed by copy, using object serialization. By default all fields are copied, except those marked static or transient. Default serialization behaviour can be overridden on a class-by-class basis.
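The pass-by-copy rule for local objects, including the exclusion of `transient` fields, can be checked with plain `java.io` object serialization, the mechanism RMI uses underneath. This is a sketch outside of RMI: the `Order` class and its fields are hypothetical, and the round trip through a byte array stands in for sending the object to another JVM.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

class SerializationSketch {
    // A hypothetical value object that would be passed by copy to a remote method.
    static class Order implements Serializable {
        private static final long serialVersionUID = 1L;
        final String item;
        final int quantity;
        transient String cachedSummary; // transient: not written to the stream

        Order(String item, int quantity) {
            this.item = item;
            this.quantity = quantity;
            this.cachedSummary = quantity + " x " + item;
        }
    }

    // Serialize then deserialize: this is what "passing a copy" amounts to.
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T copyViaStream(T original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(original);
            }
            try (ObjectInputStream in = new ObjectInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Order sent = new Order("disk", 3);
        Order received = copyViaStream(sent);
        System.out.println(received != sent);                        // true: a distinct copy
        System.out.println(received.item + " " + received.quantity); // disk 3
        System.out.println(received.cachedSummary);                  // null: transient not copied
    }
}
```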

Thus clients of the distributed application can dynamically load objects that implement the remote interface even if they are not defined in the local virtual machine. The next step is to implement the remote interface: the implementation must define a constructor for the remote object as well as all the methods declared in the interface. Once the class is created, the server must be able to create and install remote objects. Initializing the server involves creating and installing a security manager, creating one or more instances of a remote object, and registering at least one of the remote objects with the RMI remote object registry for bootstrapping purposes. An RMI client behaves similarly to a server: after installing a security manager, the client constructs a name used to look up a remote object. The client uses the Naming.lookup method to look up the remote object by name in the remote host's registry. When doing the name lookup, the code creates a URL that specifies the host where the server is running.
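Putting the steps together, here is a minimal sketch of an RMI server and client running in a single JVM, under several assumptions: the `Compute` interface, the binding name `"Compute"`, and the port number are hypothetical; the object is exported with `UnicastRemoteObject.exportObject` rather than by subclassing `UnicastRemoteObject`; the `java.rmi.server.hostname` property is pinned to the loopback address so the stub is reachable on machines whose host name does not resolve; and the security manager step is left out, because the Security Manager was deprecated for removal in Java 17 (JEP 411). The lookup uses `Naming.lookup` with an `rmi://` URL, as described above.

```java
import java.rmi.Naming;
import java.rmi.Remote;
import java.rmi.RemoteException;
import java.rmi.registry.LocateRegistry;
import java.rmi.registry.Registry;
import java.rmi.server.UnicastRemoteObject;

class RmiSketch {
    // Step 1: the remote interface. Every remote method must declare RemoteException.
    public interface Compute extends Remote {
        long square(long x) throws RemoteException;
    }

    // Step 2: an implementation of the remote interface.
    public static class ComputeImpl implements Compute {
        @Override
        public long square(long x) { return x * x; }
    }

    // Steps 3 and 4: start a server on the given registry port, then act as its client.
    static long squareRemotely(int port, long x) {
        // Advertise the loopback address in stubs (assumption: demo runs on one machine).
        System.setProperty("java.rmi.server.hostname", "127.0.0.1");
        ComputeImpl impl = new ComputeImpl();
        Registry registry = null;
        try {
            // Exporting returns a stub that implements the same remote interfaces as impl.
            Compute stub = (Compute) UnicastRemoteObject.exportObject(impl, 0);
            registry = LocateRegistry.createRegistry(port);
            registry.rebind("Compute", stub); // bootstrap: register under a well-known name

            // Client side: look the object up by URL, then call it like a local object.
            Compute remote = (Compute) Naming.lookup("rmi://127.0.0.1:" + port + "/Compute");
            return remote.square(x);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            // Unexport everything so RMI's background threads do not keep the JVM alive.
            try {
                UnicastRemoteObject.unexportObject(impl, true);
            } catch (Exception ignored) { }
            if (registry != null) {
                try {
                    UnicastRemoteObject.unexportObject(registry, true);
                } catch (Exception ignored) { }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(squareRemotely(1099, 12)); // 144
    }
}
```

In a real deployment the registry and server would run in one JVM and the client in another; only the `Naming.lookup` URL would change to name the server's host.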

Applications of Distributed Computing:

There are many applications of distributed computing, which help us in every walk of life and in all types of communication networks. Important applications are given below:

1. Telecommunication plays a vital role in our lives, and it relies on distributed computing: telephone and cellular networks, computer networks such as the Internet, and different types of wireless networks.

2. Air traffic control systems and many kinds of industrial applications are also based on distributed computing.

3. Many network applications are based on this type of computing, such as the World Wide Web (WWW), peer-to-peer networks, and distributed databases.

4. Distributed computing is also combined with parallel computation in applications such as scientific computing and distributed rendering in computer graphics.

Advantages of Distributed Computing:

1: Incremental growth: Computing power can be added in small increments.

2: Reliability: If one machine crashes, the system as a whole can still survive.

3: Speed: A distributed system may have more total computing power than a mainframe.

4: Open system: This is the most important and most characteristic property of a distributed system. Because it is an open system, it is always ready to communicate with other systems, and an open system that scales has an advantage over a perfectly closed and self-contained system.

5: Economics: Microprocessors offer a better price/performance ratio than mainframes.

Disadvantages of Distributed Computing:

1: Security: Distributed systems have inherent security issues, because data and requests travel across a shared network.

2: Networking: If the network becomes saturated, transmission problems will surface.

3: Software: There is currently relatively little software support for distributed systems.

4: Troubleshooting: Troubleshooting and diagnosing problems in a distributed system can also be more difficult, because the analysis may require connecting to remote nodes or inspecting communication between nodes.

Other forms of Computing:

Apart from distributed computing, there are other forms of computing, such as grid computing, parallel computing, mobile computing, cloud computing, cluster computing, and utility computing.

Grid Computing:

A computational grid is a hardware and software infrastructure that provides dependable, consistent, pervasive and inexpensive access to high-end computational capabilities. Grid computing also has some characteristics beyond those of distributed computing in general. A grid is a software environment that makes it possible to share disparate, loosely coupled IT resources across organizations and geographies. Using a grid, your IT resources are freed from their physical boundaries and offered as services. These resources include almost any IT component: compute cycles, storage, databases, applications, files, sensors, or scientific instruments.

In grid computing, resources can be dynamically provisioned to the users or applications that need them. Resources can be shared within a workgroup or department, across different organizations and geographies, or outside your enterprise. Grid computing is concerned with the efficient use of a pool of heterogeneous systems, with optimal workload management that lets an enterprise's entire computational resources (servers, networks, storage, and information) act together as one or more large pools of computing resources. Grid computing places no limitation on the users, departments, or organizations that can take part.

The Globus Toolkit, currently at version 5, is an open source toolkit for building computing grids, developed and provided by the Globus Alliance. It can be used to build computational grids and grid-based applications, and it allows computing power, databases, and other resources to be shared securely across corporate, institutional, and geographic boundaries without sacrificing local autonomy.

Fig b: Architecture of GTK

Parallel Computing:

The simultaneous use of more than one processor or computer to solve a problem is called parallel computing. Parallel computations can be performed on shared-memory systems with multiple CPUs, or on distributed-memory clusters made up of smaller shared-memory systems or single-CPU systems. Coordinating the concurrent work of the multiple processors and synchronizing the results are handled by program calls to parallel libraries. For example, MathWorks' Parallel Computing Toolbox lets you solve computationally and data-intensive problems using multicore processors, GPUs, and computer clusters. High-level constructs (parallel for-loops, special array types, and parallelized numerical algorithms) let you parallelize MATLAB applications without CUDA or MPI programming.

The toolbox provides twelve workers (MATLAB computational engines) to execute applications locally on a multicore desktop. Without changing the code, you can run the same application on a computer cluster or a grid computing service (using MATLAB Distributed Computing Server). You can run parallel applications interactively or in batch.
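The split, compute concurrently, then combine pattern described above can be shown with Java's standard `ExecutorService` instead of MATLAB. This sketch sums an array in chunks, one task per worker thread, and then waits on each partial result; the chunking scheme and the worker count are arbitrary choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelSumSketch {
    // Split the array into one chunk per worker, sum chunks concurrently, combine results.
    static long parallelSum(long[] data, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> partials = new ArrayList<>();
            int chunk = (data.length + workers - 1) / workers; // ceiling division
            for (int start = 0; start < data.length; start += chunk) {
                final int from = start;
                final int to = Math.min(start + chunk, data.length);
                partials.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) sum += data[i];
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> partial : partials) total += partial.get(); // wait for each worker
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) data[i] = i + 1;
        System.out.println(parallelSum(data, 4)); // 500000500000
    }
}
```

The `Future.get` calls are the synchronization step: the total is only formed once every worker has finished its chunk.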

Fig b: Architecture of parallel computing

Mobile Computing:

Mobile computing is a generic term for the use of a variety of devices, such as mobile phones, that allow people to access data and information wherever they are. Mobile communication allows voice and multimedia data to be transmitted via a computer or a mobile device without being connected to any physical or fixed link. Mobile communication is evolving day by day and has become a must-have for everyone: it is the exchange of voice and data over a communication infrastructure, regardless of any physical link. Mobile communication technology started with first-generation (1G) analog systems around 1980 and has since advanced through 2G and 3G, completely changing the landscape of communication.

There are many types of mobile computers, such as laptops, PDAs, PDA phones, and other mobile devices, many of which were introduced in the mid-1990s, including wearable technology. To use such mobile equipment, we need the right technology to build a secure and reliable infrastructure. Many mobile communication technologies are available today, such as 2G, 3G, 4G, WiMAX, WiBro, EDGE, GPRS, and many others.

Cloud Computing:

Cloud computing is a computing paradigm shift in which computing is moved away from personal computers or an individual application server to a “cloud” of computers. Users of the cloud only need to be concerned with the computing service being requested, as the underlying details of how it is achieved are hidden. This form of distributed computing pools computer resources together and manages them through software rather than by hand. It involves delivering hosted services over the Internet. These services are broadly divided into three categories: Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS).

In its most basic form, cloud computing provides infrastructure as a service. By using resources from distributed servers/computers, cloud computing frees you from configuring local servers. The cloud is another step toward making computing a service that can be adjusted to the needs of a business. For example, a retail business that needs a large number of servers during the holiday season, but not nearly as many afterwards, can use cloud computing to scale its server usage to match its needs.

Cloud computing has been gaining momentum, particularly in the SaaS environment. Organizations are quickly realizing the value of cutting costs through monthly software subscriptions, rather than purchasing software outright and having it become obsolete. When newer versions of software become available, companies need to repurchase it and sometimes increase the infrastructure needed to support the new software. With cloud-hosted services, it is possible to use enterprise-level software through a subscription, without worrying about the cost of infrastructure or upgrading to the latest version.

Fig c: Architecture of cloud computing

Benefits of Cloud Computing:

Cloud computing is helpful in multiple ways. The primary benefits are:

1) Time to market is significantly reduced.

2) Overall maintenance costs are lower, because the business no longer has its own servers to maintain. Cloud computing services also generally guarantee much higher uptime than a smaller company running its own servers can usually match.

3) Organizations can cut software licensing and administrative costs by using online services in the cloud, such as SharePoint, Exchange Server, and Office Communications Server.

Cloud computing is ideal for a small or medium-sized business that has large spikes in infrastructure needs at varying times during the year, rather than a consistent need that in-house servers could sufficiently fulfil. Available services include Windows Azure, Amazon EC2, and GoGrid.

Cluster Computing:

A computer cluster is a group of linked computers working together so closely that in many respects they form a single computer. The components of a cluster are commonly, but not always, connected to each other through fast local area networks. Clusters are usually deployed to improve performance and/or availability over that provided by a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Clustering has been available since the 1980s, when it was used in DEC's VMS systems. IBM's Sysplex is a cluster approach for mainframe systems. Microsoft, Sun Microsystems, and other leading hardware and software companies offer clustering packages that are said to offer scalability as well as availability. As traffic or availability requirements increase, all or some parts of the cluster can be increased in size or number.

Cluster computing can also be used as a relatively low-cost form of parallel processing for scientific and other applications that lend themselves to parallel operations.
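How a cluster improves both performance and availability can be sketched with a simple dispatcher that spreads requests round-robin over the nodes and skips any node marked as down. The node names and the `markDown`/`markUp` health flags are hypothetical; real clustering products rely on their own membership and health-checking protocols.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical round-robin dispatcher with failover for a small cluster.
class ClusterDispatcherSketch {
    private final List<String> nodes;
    private final Set<String> down = new LinkedHashSet<>();
    private int next = 0;

    ClusterDispatcherSketch(List<String> nodes) { this.nodes = new ArrayList<>(nodes); }

    void markDown(String node) { down.add(node); }
    void markUp(String node) { down.remove(node); }
    void addNode(String node) { nodes.add(node); } // grow the cluster as load rises

    // Round-robin over healthy nodes; a failed node is simply skipped.
    String pick() {
        for (int tried = 0; tried < nodes.size(); tried++) {
            String candidate = nodes.get(next);
            next = (next + 1) % nodes.size();
            if (!down.contains(candidate)) return candidate;
        }
        throw new IllegalStateException("no healthy node available");
    }

    public static void main(String[] args) {
        ClusterDispatcherSketch cluster =
                new ClusterDispatcherSketch(List.of("node-a", "node-b", "node-c"));
        cluster.markDown("node-b");
        for (int i = 0; i < 4; i++) System.out.print(cluster.pick() + " ");
        // prints: node-a node-c node-a node-c
    }
}
```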

Utility computing:

Conventional Internet hosting services have the capability to quickly arrange for the rental of individual servers, for example to provision a bank of web servers to accommodate a sudden surge in traffic to a web site.

“Utility computing” usually envisions some form of virtualization so that the amount of storage or computing power available is considerably larger than that of a single time-sharing computer. Multiple servers are used on the “back end” to make this possible. These might be a dedicated computer cluster built specifically to be rented out, or even an under-utilized supercomputer. The technique of running a single calculation on multiple computers is known as distributed computing.

Amazon Web Services (AWS), despite a recent outage, is the current poster child for this model, as it provides a variety of services, among them the Elastic Compute Cloud (EC2), in which customers pay for compute resources by the hour, and the Simple Storage Service (S3), for which customers pay based on storage capacity. The main benefit of utility computing is better economics. Corporate data centres are notoriously underutilized, with resources such as servers often idle 85% of the time. Utility computing allows companies to pay only for the computing resources they need, when they need them.
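The economics argument can be made concrete with simple arithmetic. Suppose, purely as an assumption, that an owned server costs the equivalent of 50 cents per hour whether it is busy or idle, a rented instance costs 100 cents per hour, and the workload needs a server only 15% of the time (the 85%-idle figure quoted above). Over a 720-hour month, owning costs 720 × 50 = 36,000 cents ($360), while renting only the busy hours costs 108 × 100 = 10,800 cents ($108). The helper names below are hypothetical, and amounts are kept in integer cents to avoid floating-point rounding.

```java
// Hypothetical cost comparison between owning a server and renting it by the hour.
class UtilityCostSketch {
    // Monthly cost in cents of owning a server: paid for every hour, busy or idle.
    static long ownedCostCents(int hoursInMonth, long ownedCentsPerHour) {
        return hoursInMonth * ownedCentsPerHour;
    }

    // Monthly cost in cents of renting only the busy hours (rounded down to whole hours).
    static long rentedCostCents(int hoursInMonth, int busyPercent, long rentedCentsPerHour) {
        long busyHours = (long) hoursInMonth * busyPercent / 100;
        return busyHours * rentedCentsPerHour;
    }

    // Utilization (percent) above which owning becomes cheaper than renting.
    static double breakEvenPercent(long ownedCentsPerHour, long rentedCentsPerHour) {
        return 100.0 * ownedCentsPerHour / rentedCentsPerHour;
    }

    public static void main(String[] args) {
        // Hypothetical prices: owning = 50 cents/hour, renting = 100 cents/hour.
        System.out.println(ownedCostCents(720, 50));       // 36000 ($360)
        System.out.println(rentedCostCents(720, 15, 100)); // 10800 ($108)
        System.out.println(breakEvenPercent(50, 100));     // 50.0
    }
}
```

Under these assumed prices, renting stays cheaper until the server is busy more than half the time, which is why lightly used corporate servers favour the utility model.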
